Have you ever wondered which drives power your Google searches, store your YouTube videos, and handle millions of Gmail messages? The question goes to the heart of modern infrastructure design and reveals Google’s clever approach to data storage.

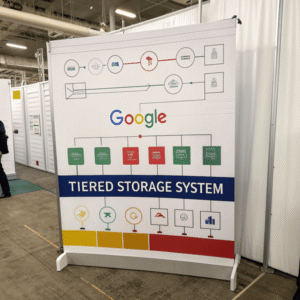

Google uses both SSDs and HDDs in their servers, employing a strategic tiered storage approach that optimizes performance, cost, and capacity based on specific workload requirements.

This balanced storage strategy isn’t random. It reflects decades of infrastructure evolution and billions of dollars in optimization research. Let me walk you through how Google makes these critical storage decisions.

Do Google servers use HDD or SSD?

Google servers use both technologies in a carefully orchestrated mix. They use both SSDs and HDDs within their infrastructure, with different hardware configurations targeted at different workloads.

Google’s storage philosophy centers on matching the right storage technology to the right use case. SSDs handle high-performance, low-latency workloads, while HDDs manage bulk storage requirements cost-effectively.

The company’s approach has evolved significantly since its early days. Initially, the index was being served from hard disk drives, as is done in traditional information retrieval (IR) systems. Soon they found that they had enough servers to keep a copy of the whole index in main memory (although with low replication or no replication at all), and in early 2001 Google switched to an in-memory index system.

Google’s storage decisions reflect their core infrastructure principles. They pick hardware that maximizes performance/price, not absolute performance, and they prioritize high throughput over low latency. This philosophy drives their mixed storage approach.
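To make the performance/price principle concrete, the Python sketch below ranks candidate hardware by throughput per dollar instead of raw throughput. The part names and numbers are hypothetical placeholders, not Google figures; the point is only that the fastest part is not necessarily the best buy.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    throughput_mbps: float  # sustained throughput in MB/s (hypothetical figure)
    price_usd: float        # purchase price in USD (hypothetical figure)

    @property
    def perf_per_dollar(self) -> float:
        """Throughput delivered for each dollar spent."""
        return self.throughput_mbps / self.price_usd


def pick_best(candidates: list[Candidate]) -> Candidate:
    """Return the candidate with the highest throughput per dollar."""
    return max(candidates, key=lambda c: c.perf_per_dollar)


# Hypothetical options: the "premium" part is faster in absolute terms,
# but the "commodity" part delivers more throughput per dollar.
options = [
    Candidate("premium", throughput_mbps=1000, price_usd=500),
    Candidate("commodity", throughput_mbps=600, price_usd=200),
]

best = pick_best(options)
print(best.name, best.perf_per_dollar)
```

Ranking by a ratio rather than by absolute speed is what lets slower, cheaper components win when the workload can be spread across many machines.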

The company uses different storage types for different functions:

HDDs for Bulk Storage

According to IDC, stored data will increase 17.8% by 2024 with HDD as the main storage technology. Google leverages HDDs primarily for:

- Cold storage and archival data

- Large dataset storage for AI training

- Backup and replication systems

- Content storage for services like YouTube

SSDs for High-Performance Tasks

Google does make extensive use of SSDs, but it uses them primarily for high-performance workloads and caching, which helps disk storage by shifting seeks to SSDs. SSDs power:

- Search index servers requiring immediate access

- Database operations with strict latency requirements

- Live service functions needing instant response times

- Caching layers across their infrastructure
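Caching on SSD helps the disks because every read served from flash is one less HDD seek. A toy model makes this visible: a small SSD read cache with least-recently-used (LRU) eviction sits in front of an HDD, and each cache miss counts as a seek. This is a minimal sketch under simplifying assumptions (read-only workload, fixed-size blocks, a hypothetical `SsdCachedDisk` class); it does not describe Google’s actual caching layer.

```python
from collections import OrderedDict


class SsdCachedDisk:
    """Toy model of an SSD read cache in front of an HDD.

    Cache misses are counted as HDD seeks; hits are served from the
    (simulated) SSD tier. Eviction is least-recently-used (LRU).
    """

    def __init__(self, hdd: dict[str, bytes], ssd_capacity: int):
        self.hdd = hdd                          # backing store: block id -> data
        self.ssd: OrderedDict[str, bytes] = OrderedDict()
        self.ssd_capacity = ssd_capacity        # max number of cached blocks
        self.hdd_seeks = 0
        self.ssd_hits = 0

    def read(self, block_id: str) -> bytes:
        if block_id in self.ssd:
            self.ssd.move_to_end(block_id)      # mark as most recently used
            self.ssd_hits += 1
            return self.ssd[block_id]
        self.hdd_seeks += 1                     # miss: pay for an HDD seek
        data = self.hdd[block_id]
        self.ssd[block_id] = data               # populate the SSD cache
        if len(self.ssd) > self.ssd_capacity:
            self.ssd.popitem(last=False)        # evict the least recently used block
        return data


hdd = {f"blk{i}": bytes([i]) for i in range(10)}
disk = SsdCachedDisk(hdd, ssd_capacity=2)
for block in ["blk1", "blk2", "blk1", "blk1", "blk3", "blk2"]:
    disk.read(block)
print(disk.hdd_seeks, disk.ssd_hits)
```

The repeated reads of `blk1` never touch the HDD, which is the "shifting seeks to SSDs" effect in miniature.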

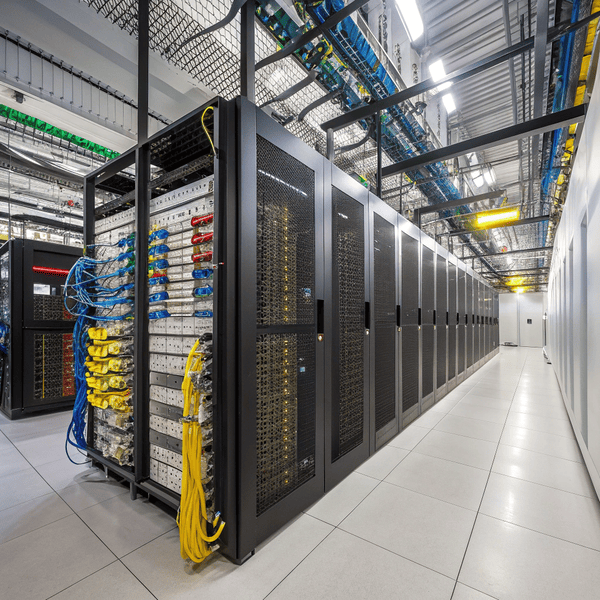

What server hardware does Google use?

Google’s server hardware philosophy breaks from traditional enterprise approaches. They use low-reliability consumer hardware and make up for it with fault-tolerant software. This strategy allows massive scale at reduced costs.

Google designs custom server hardware optimized for their specific workload patterns, prioritizing efficiency and density over individual component reliability.

The historical evolution shows their commitment to custom solutions. The original hardware (circa 1998) that Google used while it was located at Stanford University included a Sun Microsystems Ultra II with dual 200 MHz processors and 256 MB of RAM. Compare this with the roughly 2.5 million servers Google was estimated to operate in 2016.

Google’s hardware design principles include:

Custom Motherboards and Components

Google designs their own motherboards and carefully selects components. They work with suppliers to audit and verify security properties of components they use.

Titan Security Chips

Google deploys custom Titan security chips across their infrastructure. These hardware security chips serve as a hardware root of trust, identifying and authenticating legitimate Google devices at the hardware level.

Optimized Form Factors

The current 3.5" HDD geometry inherited its size from the PC floppy disk. An alternative form factor should yield a better total cost of ownership. Google has pushed for storage innovations better suited to data center deployment patterns.

Power Efficiency Focus

They pick hardware that maximizes performance/price, not absolute performance. This includes optimizing for power efficiency and cooling requirements across their global data center network.

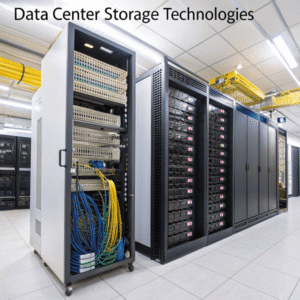

Do data centers use SSD or HDD?

The answer varies significantly across different data center operators, but trends show a strategic mix favoring HDDs for capacity and SSDs for performance-critical applications.

Modern data centers, including Google’s, use tiered storage architectures that combine HDDs for bulk storage with SSDs for high-performance applications, optimizing both cost and performance.
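A tiered architecture needs a placement rule. The sketch below assumes a simple, hypothetical policy: data that is read often enough stays on SSD, and data that is rarely read or has gone cold is placed on HDD. The thresholds (`HOT_READS_PER_DAY`, `COLD_AFTER_DAYS`) and object names are illustrative assumptions, not values any particular operator uses.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    SSD = "ssd"
    HDD = "hdd"


@dataclass
class DataObject:
    key: str
    days_since_last_access: int
    reads_per_day: float


# Hypothetical thresholds; a real system would tune these per workload.
HOT_READS_PER_DAY = 100.0
COLD_AFTER_DAYS = 30


def choose_tier(obj: DataObject) -> Tier:
    """Place frequently read data on SSD and everything else on HDD."""
    if obj.days_since_last_access >= COLD_AFTER_DAYS:
        return Tier.HDD        # cold data: cheapest capacity wins
    if obj.reads_per_day >= HOT_READS_PER_DAY:
        return Tier.SSD        # hot data: latency and random I/O matter
    return Tier.HDD            # warm but infrequent: still cheaper on disk


objects = [
    DataObject("index-shard-7", days_since_last_access=0, reads_per_day=5000),
    DataObject("backup-2019", days_since_last_access=400, reads_per_day=0),
    DataObject("video-archive", days_since_last_access=2, reads_per_day=3),
]
for obj in objects:
    print(obj.key, choose_tier(obj).value)
```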

Industry data reveals the scale of this approach. SSD is projected to represent only 12% of the bits in data centers in 2024 according to IDC. This means HDDs still handle the vast majority of capacity requirements.

Several factors drive data center storage decisions:

Cost Considerations

The economic reality remains stark. According to Google, the cost per GB of SSDs remains too high. More importantly, the growth rates in capacity per dollar of disks and SSDs are relatively close, so the price gap is not closing quickly. This cost differential means HDDs continue to dominate bulk storage applications.
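The point about growth rates is worth a quick calculation. If capacity per dollar grows by a factor of (1 + g) per year, cost per GB falls by the same factor, so the SSD-to-HDD cost ratio shrinks only by the gap between the two growth rates. The starting ratio and growth rates below are hypothetical inputs chosen for illustration, not published figures.

```python
def cost_ratio(initial_ratio: float, hdd_growth: float, ssd_growth: float, years: int) -> float:
    """SSD-to-HDD cost-per-GB ratio after `years` years.

    Growing capacity per dollar by (1 + g) each year divides cost per GB by
    the same factor, so the ratio changes by (1 + g_hdd) / (1 + g_ssd) per year.
    """
    return initial_ratio * ((1 + hdd_growth) / (1 + ssd_growth)) ** years


def years_until_ratio(initial_ratio: float, hdd_growth: float, ssd_growth: float, target: float) -> int:
    """Smallest whole number of years until the ratio drops to `target` or below."""
    if ssd_growth <= hdd_growth and initial_ratio > target:
        raise ValueError("the gap never closes unless SSD growth outpaces HDD growth")
    years = 0
    while cost_ratio(initial_ratio, hdd_growth, ssd_growth, years) > target:
        years += 1
    return years


# Hypothetical inputs: SSDs cost 5x more per GB today, HDD capacity per dollar
# grows 15% a year, and SSD capacity per dollar grows 20% a year.
print(round(cost_ratio(5.0, 0.15, 0.20, 5), 2))
print(years_until_ratio(5.0, 0.15, 0.20, target=2.0))
```

With these made-up inputs, five years only shrinks the SSD premium from 5x to roughly 4x, and it takes about two decades to reach 2x. Close growth rates keep the gap open for a long time.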

Workload-Specific Requirements

SSD is significantly faster and has more predictable performance than HDD. In a Bigtable cluster, SSD storage delivers significantly lower latencies for both reads and writes than HDD storage. For that reason, you shouldn’t consider using HDD storage unless you’re storing at least 10 TB of data and your workload is not latency-sensitive.
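The HDD guidance above boils down to two conditions that must both hold. Here they are as a hypothetical helper function; it is not part of any Bigtable client library (in Bigtable, the storage type is chosen when you create an instance), just a readable restatement of the rule.

```python
def choose_bigtable_storage(expected_data_tb: float, latency_sensitive: bool) -> str:
    """Return "HDD" only for at least 10 TB of non-latency-sensitive data, else "SSD"."""
    if expected_data_tb >= 10 and not latency_sensitive:
        return "HDD"
    return "SSD"


print(choose_bigtable_storage(50, latency_sensitive=False))  # large batch/analytics archive
print(choose_bigtable_storage(50, latency_sensitive=True))   # user-facing serving
print(choose_bigtable_storage(2, latency_sensitive=False))   # too small to justify HDD
```

Because both conditions are required, SSD is the default and HDD is the exception.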

Performance vs. Capacity Trade-offs

Different use cases require different storage approaches:

| Storage Type | Best For | Performance | Cost per TB |
|---|---|---|---|
| HDD | Cold storage, backups, archives | Good sequential I/O | Lowest |
| SATA SSD | General purpose, databases | High random I/O | Medium |
| NVMe SSD | High-performance computing, AI | Highest performance | Highest |

Future Trends

Early movers who have the vision to pay a higher price per bit and lock up supply of high capacity SSDs will get the TCO benefits over their competition and be able to drive their storage up with less burden on data center power, cooling, and floor space.

Google’s approach demonstrates that successful data center storage isn’t about choosing one technology over another. Instead, it requires understanding workload patterns, cost structures, and performance requirements to create optimized storage tiers. To lessen the effects of unavoidable hardware failure, software is designed to be fault tolerant. Thus, when a system goes down, data is still available on other servers, which increases reliability.
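The fault-tolerance idea can be sketched as replication plus failover: every write lands on several servers, and a read skips any server that has failed. The `Replica` class, the three-copy layout, and the simulated failure flag below are assumptions made for illustration; this is not the interface of Google’s storage systems.

```python
class ReplicaDown(Exception):
    """Raised when a (simulated) server holding a replica has failed."""


class Replica:
    def __init__(self, name: str):
        self.name = name
        self.data: dict[str, bytes] = {}
        self.up = True  # flip to False to simulate a hardware failure

    def get(self, key: str) -> bytes:
        if not self.up:
            raise ReplicaDown(self.name)
        return self.data[key]


def write(replicas: list[Replica], key: str, value: bytes) -> None:
    """Store a copy of the value on every replica."""
    for replica in replicas:
        replica.data[key] = value


def read(replicas: list[Replica], key: str) -> bytes:
    """Return the value from the first healthy replica, failing over past dead ones."""
    for replica in replicas:
        try:
            return replica.get(key)
        except ReplicaDown:
            continue  # this server is down; try the next copy
    raise RuntimeError(f"all replicas of {key!r} are unavailable")


servers = [Replica("server-a"), Replica("server-b"), Replica("server-c")]
write(servers, "photo-123", b"jpeg bytes")
servers[0].up = False  # simulate one machine failing
print(read(servers, "photo-123"))
```

Only when every copy is unavailable does the read fail, which is why cheap, individually unreliable machines can still provide reliable storage.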

This multi-tiered approach allows Google to serve billions of users while maintaining both performance and cost efficiency. Their storage strategy continues evolving as new technologies emerge and workload patterns change, but the fundamental principle remains: match storage technology to specific use case requirements rather than applying one-size-fits-all solutions.

Conclusion

Google’s mixed storage approach demonstrates that successful infrastructure requires strategic technological choices rather than absolute preferences, balancing performance, cost, and reliability across their global operations.