Server farms need to balance capacity, cost, and performance in an ever-growing data landscape. Which storage media is winning the race today: HDDs or SSDs?

Most server farms still rely heavily on HDDs for bulk data storage due to their lower cost per gigabyte, but SSDs are rapidly gaining ground for performance-critical tasks and caching in modern server environments.[1][2][13]

Rising performance demands, new applications like AI, and the relentless growth of stored data have kept both HDD and SSD technologies evolving. Let’s break down what’s actually happening in server farms today and why.

Do most servers use HDD or SSD?

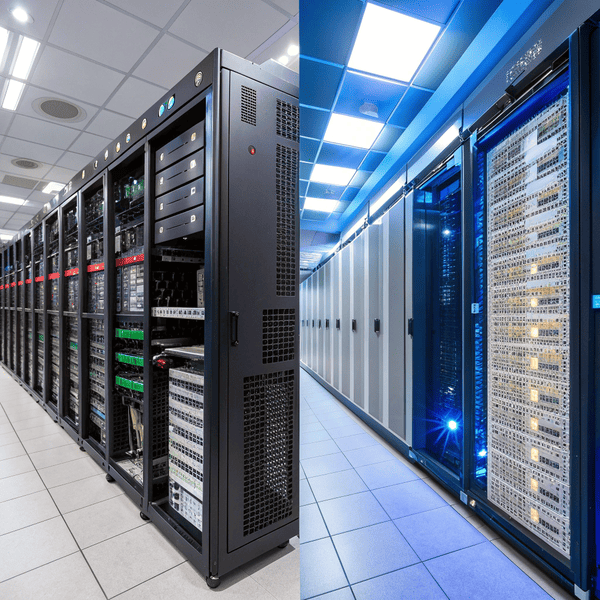

Modern data centers and server farms have a mix of storage types, but what’s deployed most often?

Most servers in data centers still use HDDs for mass storage, but SSDs are standard in new, high-performance servers and as cache/tiered storage. The trend is accelerating toward hybrid or all-flash arrays for speed-sensitive workloads.[1][2][12][13]

HDDs, with their low cost per terabyte and mature technology, remain the mainstay for large-scale storage—especially in server farms and hyperscale data centers where petabytes of data are stored.[1][2] The sheer price difference keeps bulk archives, streaming media, and secondary backups firmly on spinning disks.

However, SSD adoption is moving quickly. Servers tasked with running databases, transaction processing, virtualization, or workload caching increasingly ship with SSDs. Their performance (no mechanical seek time, extremely high IOPS, much lower latency) enables a level of responsiveness that spinning platters can’t match.[6][12][13]

Still, most “general use” servers will feature a hybrid approach: SSDs for boot, caching, or hot data, and HDDs for capacity. In new deployments, especially in cloud and high-transaction workloads, all-flash is becoming common, though cost and capacity needs keep HDDs from disappearing.[13]
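To make the hybrid idea concrete, here is a minimal Python sketch of an SSD read cache sitting in front of HDD storage, using a least-recently-used (LRU) eviction policy. Everything in it is an assumption for illustration: the class `SsdReadCache`, the 0.1 ms and 8 ms latencies, the cache size, and the synthetic workload in which 90% of reads target a small "hot" set. Real caching layers use their own policies and measured device characteristics.

```python
import random
from collections import OrderedDict

# Assumed, illustrative latencies (not measurements): an SSD read is modeled
# as 0.1 ms and an HDD read (seek plus rotation) as 8 ms.
SSD_LATENCY_MS = 0.1
HDD_LATENCY_MS = 8.0


class SsdReadCache:
    """Hypothetical LRU read cache: a small SSD tier in front of a large HDD tier."""

    def __init__(self, capacity_blocks: int) -> None:
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block_id -> None, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> float:
        """Simulate reading one block and return its latency in ms."""
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)  # mark as most recently used
            self.hits += 1
            return SSD_LATENCY_MS
        # Miss: serve from HDD, then promote the block into the SSD cache.
        self.misses += 1
        self.blocks[block_id] = None
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return HDD_LATENCY_MS

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


# Synthetic workload: 90% of reads go to a 500-block "hot" set; the rest are
# scattered across a large cold range that only the HDD tier can hold.
random.seed(0)
cache = SsdReadCache(capacity_blocks=1_000)
reads = 100_000
total_ms = 0.0
for _ in range(reads):
    if random.random() < 0.9:
        block = random.randrange(500)
    else:
        block = random.randrange(500, 1_000_000)
    total_ms += cache.read(block)

mean_ms = total_ms / reads
print(f"hit ratio: {cache.hit_ratio():.2f}, mean read latency: {mean_ms:.2f} ms")
```

Because most reads are served from the SSD tier, the mean latency lands close to the SSD figure even though nearly all of the data lives on HDD. Roughly, mean latency ≈ hit ratio × SSD latency + (1 − hit ratio) × HDD latency, so the payoff depends on how concentrated the hot data really is.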

| Storage Role | Primary Tech | Why Chosen |
|---|---|---|
| Mass/archive storage | HDD | Lowest $/GB, huge capacities |
| High-IOPS databases/caching | SSD | Speed, low latency |
| Boot/system disks | SSD | Fast startup, reliability |
| Mixed/hybrid arrays | Both (tiered) | Optimize cost/performance |

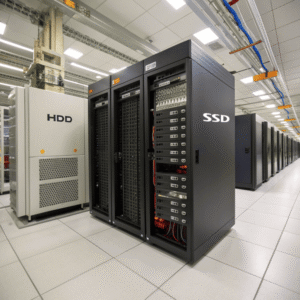

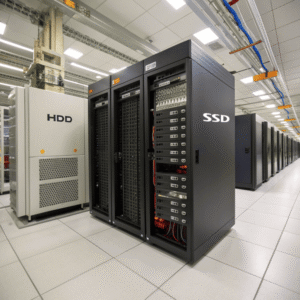

Why aren’t SSDs used in all servers?

If SSDs are much faster, what holds back universal adoption in servers and server farms?

SSDs remain more expensive per gigabyte, and while their endurance and reliability have improved, the economics of mass storage still favor HDDs for most bulk data. Certain enterprise SSDs also require specific interface compatibility or advanced power-loss protection.[1][9][12]

Cost is the single biggest factor: enterprise SSDs, especially in large capacities, still cost about five to ten times as much per terabyte as hard drives.[1][2] A server farm that needs to store dozens or hundreds of petabytes often cannot justify moving all data to SSD unless absolutely necessary, especially for “cold” or rarely accessed files.
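A quick back-of-the-envelope calculation shows how that price gap scales with capacity. The per-terabyte prices below are hypothetical, chosen only so the SSD price falls inside the "five to ten times" range mentioned above, and the function `raw_media_cost_usd` is an illustrative helper that counts drive cost alone.

```python
# Hypothetical prices in USD per terabyte, picked only so that the SSD price
# sits inside the "five to ten times" range; real prices vary widely.
HDD_USD_PER_TB = 15.0
SSD_USD_PER_TB = 100.0


def raw_media_cost_usd(capacity_pb: float, usd_per_tb: float) -> float:
    """Cost of the drives alone for a given capacity (1 PB = 1,000 TB).

    Ignores redundancy overhead, servers, power, cooling, and discounts.
    """
    return capacity_pb * 1_000 * usd_per_tb


capacity_pb = 100  # an illustrative 100 PB farm
hdd_cost = raw_media_cost_usd(capacity_pb, HDD_USD_PER_TB)
ssd_cost = raw_media_cost_usd(capacity_pb, SSD_USD_PER_TB)
print(f"HDD: ${hdd_cost:,.0f}  SSD: ${ssd_cost:,.0f}  ratio: {ssd_cost / hdd_cost:.1f}x")
```

With these assumed prices, the raw media for 100 PB costs about $1.5 million on HDD versus $10 million on SSD, before any redundancy is added.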

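Capacity also plays a role, though not simply in terms of maximum drive size. Enterprise SSDs now reach up to 100TB per drive, well beyond the 36TB of the largest HDDs, but because those SSDs cost far more per terabyte, HDDs remain the preferred option for pure capacity expansion.[6]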
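Converting the drive sizes cited above into drive counts illustrates why maximum capacity alone does not decide the matter. This sketch assumes a hypothetical 10 PB target and counts raw capacity only; `drives_needed` is an illustrative helper, and real deployments add redundancy on top.

```python
import math


def drives_needed(capacity_pb: float, drive_tb: float) -> int:
    """Minimum drive count for a raw capacity (1 PB = 1,000 TB, no redundancy)."""
    return math.ceil(capacity_pb * 1_000 / drive_tb)


capacity_pb = 10  # illustrative target capacity
hdd_drives = drives_needed(capacity_pb, 36)    # largest HDDs cited: 36TB
ssd_drives = drives_needed(capacity_pb, 100)   # largest SSDs cited: 100TB
print(f"{capacity_pb} PB needs {hdd_drives} x 36TB HDDs or {ssd_drives} x 100TB SSDs")
```

SSDs need far fewer drives (100 versus 278 here), so density favors flash; it is the per-terabyte price that keeps HDDs in the capacity role.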

Endurance is much improved in modern SSDs, but write-intensive or archival workloads (logs, surveillance, backups) can still put pressure on write cycles, making HDDs a safer choice for certain data profiles—though this gap narrows with each SSD generation.[6][13]

There are also interface and infrastructure issues: legacy servers may only offer SATA/SAS drive bays, whereas SSDs deliver their full performance over newer NVMe/PCIe interfaces.[1][3][6] Enterprise SSDs also need proper power-loss protection, and not all data centers are ready for an “all-flash” design.

Finally, not every workload benefits from SSD speed. For sequential or bulk reads and writes (media streaming, backups), HDDs deliver reasonable throughput and the bottleneck may lie elsewhere, for example in the network, making the investment harder to justify.[9][13]
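A simple model makes the bottleneck argument concrete: a storage node can serve no more than the slower of its disks and its network link. All figures here (250 MB/s per HDD, 3,000 MB/s per NVMe SSD, a 25 Gbit/s link, 12 drives) are assumptions for illustration, and `deliverable_mbps` is a hypothetical helper that ignores protocol overhead and CPU limits.

```python
# Hypothetical figures for one storage node serving large sequential streams.
HDD_SEQ_MBPS = 250     # assumed sustained sequential throughput per HDD, MB/s
SSD_SEQ_MBPS = 3_000   # assumed sequential throughput per NVMe SSD, MB/s
NIC_GBPS = 25          # assumed network link speed, Gbit/s


def deliverable_mbps(drives: int, per_drive_mbps: float, nic_gbps: float) -> float:
    """Throughput the node can actually serve: the slower of its disks and its link."""
    disk_mbps = drives * per_drive_mbps
    nic_mbps = nic_gbps * 1_000 / 8  # Gbit/s -> MB/s (decimal), ignoring protocol overhead
    return min(disk_mbps, nic_mbps)


hdd_node = deliverable_mbps(12, HDD_SEQ_MBPS, NIC_GBPS)
ssd_node = deliverable_mbps(12, SSD_SEQ_MBPS, NIC_GBPS)
print(f"12 HDDs: {hdd_node:,.0f} MB/s, 12 SSDs: {ssd_node:,.0f} MB/s")
```

Under these assumptions, twelve SSDs serve barely more than twelve HDDs (3,125 versus 3,000 MB/s) because the network link is close to saturated either way.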

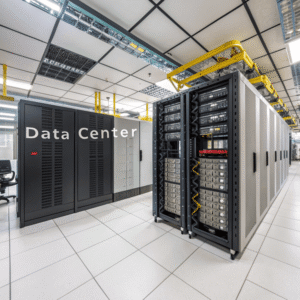

Do data centers use HDD or SSD?

What does the reality look like inside a modern data center?

Most data centers use both HDDs and SSDs. HDDs dominate for bulk storage due to lower costs. SSDs are standard for boot drives, caching, and applications needing high performance. A tiered storage model is common.[1][2][12][13]

In real-world data centers, it’s about deploying the right storage tech for each application. HDDs are unbeatable for scale and cost, enabling data centers to store exabytes economically. SSDs, meanwhile, are essential for any latency-sensitive, high-transaction, or random-access workload. From boot drives (for OS images) to transactional databases and VM storage, SSDs boost speed and productivity.[12][13]

Tiered storage is the standard:

- “Hot” or frequently accessed data: SSD/NVMe

- “Warm” or occasionally accessed: hybrid HDD/SSD

- “Cold” or archive: HDD
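The tiering rule in the list above can be sketched as a small placement function. The access-frequency thresholds (100 and 1 reads per day), the `DataSet` type, and the example dataset names are all hypothetical; production tiering policies are tuned per workload and often consider recency, size, and cost as well.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real tiering policies are tuned per workload.
HOT_READS_PER_DAY = 100
WARM_READS_PER_DAY = 1


@dataclass
class DataSet:
    name: str
    reads_per_day: float


def assign_tier(ds: DataSet) -> str:
    """Map access frequency to the hot/warm/cold tiers."""
    if ds.reads_per_day >= HOT_READS_PER_DAY:
        return "hot: SSD/NVMe"
    if ds.reads_per_day >= WARM_READS_PER_DAY:
        return "warm: hybrid HDD/SSD"
    return "cold: HDD"


for ds in [DataSet("orders_db", 50_000), DataSet("weekly_backups", 2), DataSet("old_logs", 0.01)]:
    print(f"{ds.name:15} -> {assign_tier(ds)}")
```

Real systems usually re-evaluate placement periodically, migrating data down to cheaper tiers as it cools.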

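Major cloud providers (AWS, Google Cloud, Azure) offer storage classes that let customers choose fast SSD-backed storage, throughput-focused HDD storage, or archival cold storage for different needs. On AWS, for example, EBS offers both SSD and HDD volume types, and S3 offers archival storage classes.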

This balance reflects both technical and economic realities. Future trends may favor SSDs as prices drop and capacities soar, but HDDs remain indispensable for the largest workloads.[1][12][13]

| Storage Tier | Tech Used | Typical Workload | Reason |
|---|---|---|---|
| Hot (high IOPS) | SSD/NVMe | DBs, VMs, caching | Performance, low latency |
| Warm | HDD/SSD | Active archives, backups | Cost, moderate access |
| Cold | HDD | Archives, logs, DR | Lowest cost, rarely needed |

Conclusion

Server farms today mostly use HDDs where capacity and cost matter, with SSDs increasingly adopted where speed and reliability are vital. Hybrid models are the norm, but the balance keeps changing.[1][2][12][13]